How to Upload Code With ESP32 in Raspberry Pi

Introduction

When we use a camera in a Raspberry Pi project, we either use a CSI camera or a USB camera. These cameras need to be attached to the Raspberry Pi, and sometimes that can be an issue. Wouldn't it be great if we could add a wireless camera to the Raspberry Pi? Some ESP32 boards have a built-in camera, and it is extremely easy to get photos or stream video from an ESP32-based camera.

In this tutorial, we will learn:

- how to turn an ESP32 with a built-in camera into a video streaming server

- how to get the video from the ESP32 and do some processing on the video

To learn the above things, we will modify the image classification program from TensorFlow Lite so that it classifies the video from the ESP32 instead of the Pi Camera.

Tools and Materials

- Raspberry Pi 4

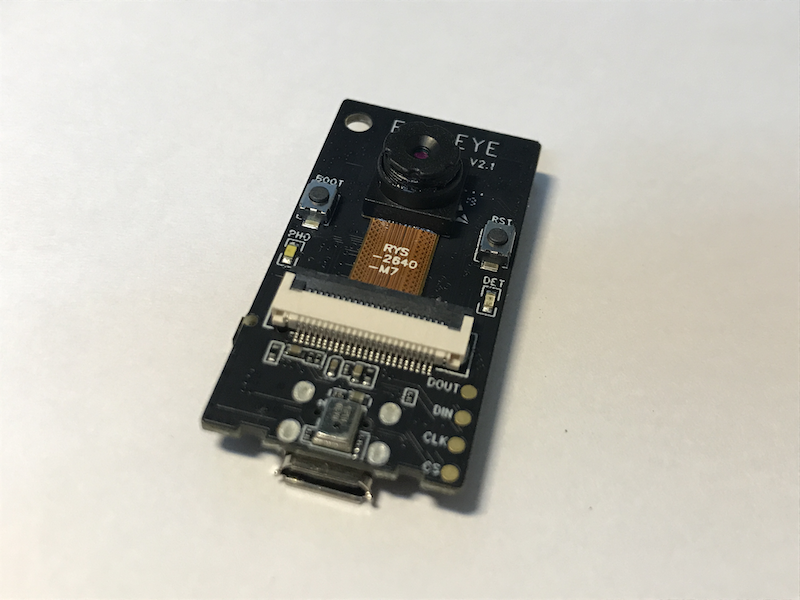

- ESP-EYE

Prerequisites

Before the tutorial, make sure you have:

- understood the basic concept of image recognition with machine learning,

- created and activated a Python virtual environment on your Raspberry Pi,

- installed the TensorFlow Lite runtime in the virtual environment.

If you haven't done these yet, follow this tutorial to do so.

Flash the ESP32 program

Before flashing the program, make sure that you have installed the Arduino core for ESP32. If you have not done so, check out this tutorial to install it via the board manager.

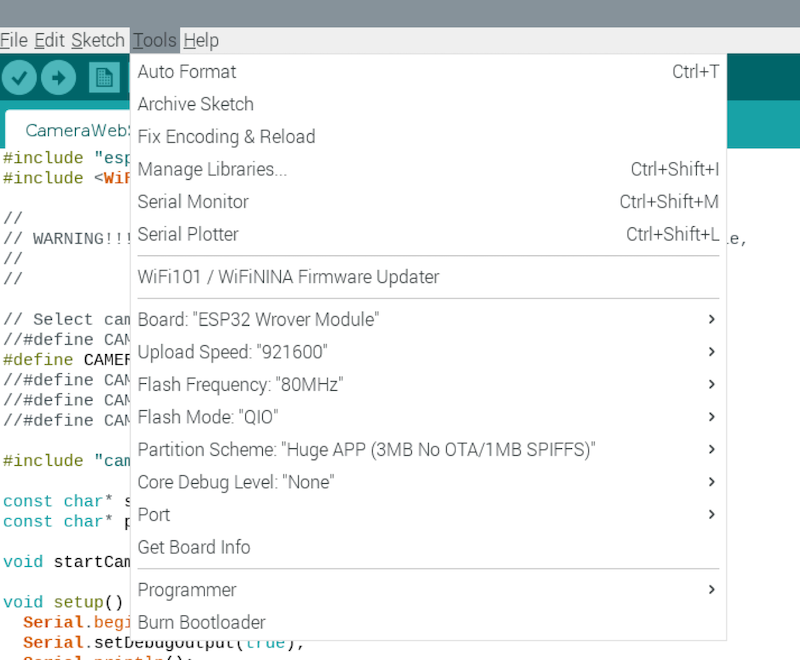

If the Arduino core for ESP32 is installed, click Tools → Board → ESP32 Wrover Module in the Arduino IDE. Then, click Tools → Partition Scheme → Huge APP.

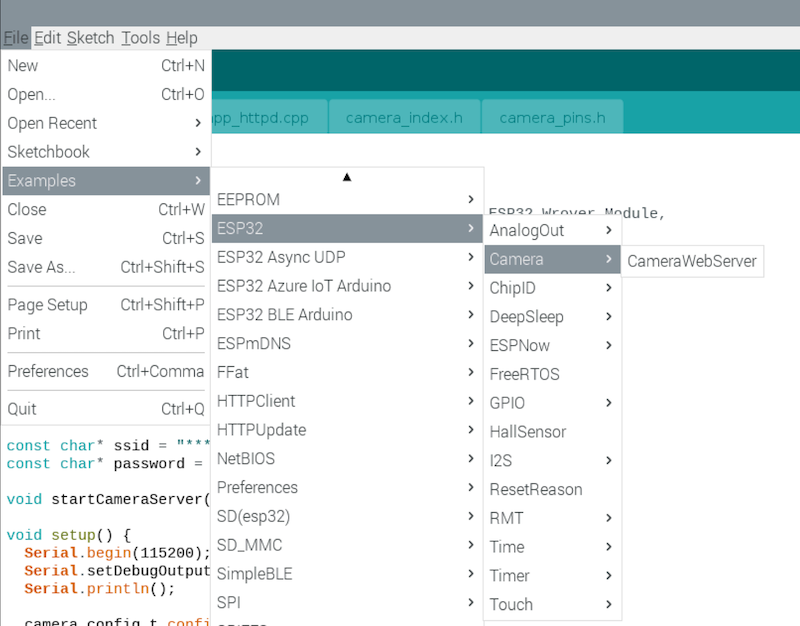

After that, we can open the Camera Web Server sketch, which is included in the Arduino core for ESP32. Click File → Examples → ESP32 → Camera → CameraWebServer.

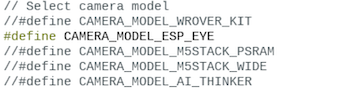

We need to select the camera model at the top. Uncomment the camera model used (in our example, ESP-EYE) and comment out the others.

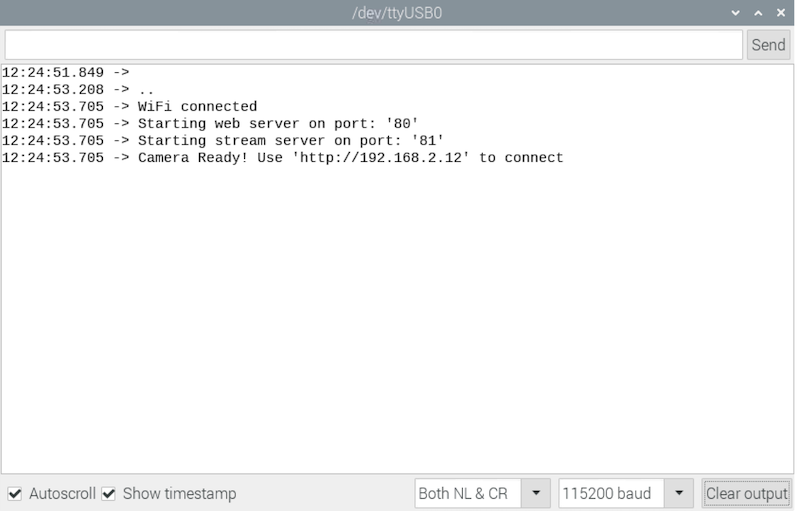

After that, change the Wi-Fi credentials inside the sketch. Then, we can upload the sketch to the ESP-EYE. When the upload is completed, we can see the status of the ESP-EYE inside the Serial Monitor. Note down the IP address of the ESP-EYE.

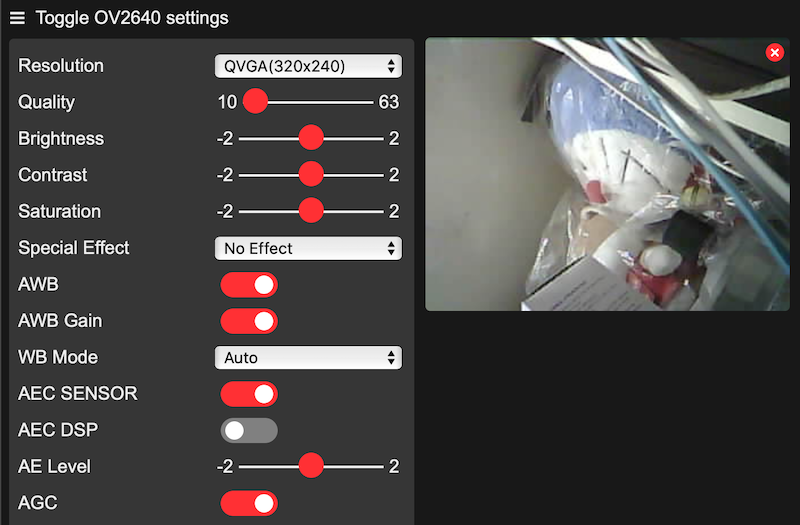

Enter the IP address of the ESP-EYE in the browser. We can now stream video from the ESP-EYE!

Note: Only one client can be served by the streaming server on the ESP-EYE at a time.
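The Python program later in this tutorial reads the stream from port 81 at the path `/stream`. As a minimal sketch, a small helper can build that URL from the IP address noted in the Serial Monitor and reject a mistyped address early. The function name `build_stream_url` and the two constants are hypothetical names chosen for illustration.

```python
import ipaddress

STREAM_PORT = 81          # port used by the stream URL later in this tutorial
STREAM_PATH = "/stream"   # path used by the stream URL later in this tutorial


def build_stream_url(ip_addr: str) -> str:
    """Return the stream URL for an ESP-EYE at the given IPv4 address."""
    # IPv4Address raises ValueError for malformed input such as '192.168.2'
    ip = ipaddress.IPv4Address(ip_addr.strip())
    return f"http://{ip}:{STREAM_PORT}{STREAM_PATH}"


if __name__ == "__main__":
    print(build_stream_url("192.168.2.29"))  # http://192.168.2.29:81/stream
```

Because of the one-client limit in the note above, it may help to close the browser tab that shows the stream before a program opens this URL.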

Install OpenCV on Raspberry Pi

It's time to work on the Raspberry Pi. First, we need to install the dependencies of the OpenCV library. You may copy and execute the following commands one by one, or download and execute this shell script.

    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libjpeg-dev libtiff5-dev libjasper-dev libpng-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libxvidcore-dev libx264-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libfontconfig1-dev libcairo2-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libgdk-pixbuf2.0-dev libpango1.0-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libgtk2.0-dev libgtk-3-dev -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libatlas-base-dev gfortran -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libhdf5-dev libhdf5-serial-dev libhdf5-103 -y
    (tfl) pi@raspberrypi:~/ai$ sudo apt-get install libqtgui4 libqtwebkit4 libqt4-test python3-pyqt5 -y

After the installation of the dependencies, we can install the OpenCV library.

    (tfl) pi@raspberrypi:~/ai$ pip install opencv-contrib-python==4.1.0.25

We may run the Python interpreter and check whether the OpenCV library is installed correctly.

    import cv2
    print(cv2.__version__)

Finally, we install the requests library.

    (tfl) pi@raspberrypi:~/ai$ pip install requests

Modify the Image Classification Program from TensorFlow

Make a copy of 'classify_picamera.py' as mentioned in this tutorial and open it. Then, we import the necessary libraries.

    import requests
    from io import BytesIO
    import cv2

The requests library sends HTTP requests to the ESP-EYE and streams the video data. The video data is put together by using BytesIO from the io module. Finally, we use OpenCV to display the video.
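Before going further, it can save time to confirm that these libraries are importable inside the virtual environment. The following is a minimal sketch using only the standard library; the helper name `missing_modules` is hypothetical, and `numpy` is included on the assumption that the program uses it as `np`, as the later code does.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    # find_spec returns None when a top-level module is not installed
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # cv2 is the import name of the OpenCV package installed above
    missing = missing_modules(["cv2", "numpy", "requests"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Any name reported as missing can be installed with pip in the same virtual environment before continuing.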

Then, we comment out the code in the main function that uses the Raspberry Pi's camera.

    # with picamera.PiCamera(resolution=(640, 480), framerate=30) as camera:
    #   camera.start_preview()
    #   try:
    #     stream = io.BytesIO()
    #     for _ in camera.capture_continuous(
    #         stream, format='jpeg', use_video_port=True):
    #       stream.seek(0)
    #       image = Image.open(stream).convert('RGB').resize((width, height),
    #                                                        Image.ANTIALIAS)
    #       start_time = time.time()
    #       results = classify_image(interpreter, image)
    #       elapsed_ms = (time.time() - start_time) * 1000
    #       label_id, prob = results[0]
    #       stream.seek(0)
    #       stream.truncate()
    #       camera.annotate_text = '%s %.2f\n%.1fms' % (labels[label_id], prob,
    #                                                   elapsed_ms)
    #   finally:
    #     camera.stop_preview()

After the commented code, we first define variables for the IP address of the ESP-EYE and the URL of the streaming server.

    ip_addr = '192.168.2.29'
    stream_url = 'http://' + ip_addr + ':81/stream'

Then, we create an HTTP GET request to stream the content from the ESP server.

    res = requests.get(stream_url, stream=True)

Next, we read the image data streamed from the ESP server, process it with OpenCV and make an inference with TensorFlow Lite.

    for chunk in res.iter_content(chunk_size=100000):
        if len(chunk) > 100:
            try:
                start_time = time.time()
                img_data = BytesIO(chunk)
                cv_img = cv2.imdecode(np.frombuffer(img_data.read(), np.uint8), 1)
                cv_resized_img = cv2.resize(cv_img, (width, height), interpolation=cv2.INTER_AREA)
                results = classify_image(interpreter, cv_resized_img)
                elapsed_ms = (time.time() - start_time) * 1000
                label_id, prob = results[0]
                cv2.putText(cv_img, f'{labels[label_id]}', (0, 25), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 255), 2)
                cv2.putText(cv_img, f'{prob}', (0, 50), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 255), 2)
                cv2.imshow("OpenCV", cv_img)
                cv2.waitKey(1)
                print(f'elapsed_ms: {elapsed_ms}')
            except Exception as e:
                print(e)
                continue

Let's explore the important parts of the above code.

There is some metadata in the streamed data. We set a threshold of 100 bytes to differentiate the metadata from the image data:

    if len(chunk) > 100:

Then, BytesIO is used to turn the streamed data into an in-memory byte stream whose contents OpenCV can decode:

    img_data = BytesIO(chunk)

Next, we turn the raw byte data into an OpenCV image and resize it:

    cv_img = cv2.imdecode(np.frombuffer(img_data.read(), np.uint8), 1)
    cv_resized_img = cv2.resize(cv_img, (width, height), interpolation=cv2.INTER_AREA)

Similar to the original code inside classify_picamera.py, we use TensorFlow Lite to make an inference with the image:

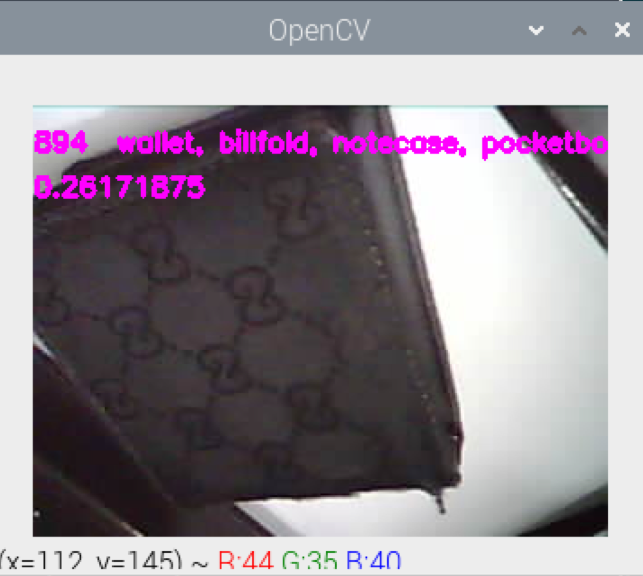

    results = classify_image(interpreter, cv_resized_img)
    label_id, prob = results[0]

Finally, we add labels to the image and display it:

    cv2.putText(cv_img, f'{labels[label_id]}', (0, 25), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 255), 2)
    cv2.putText(cv_img, f'{prob}', (0, 50), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 255), 2)
    cv2.imshow("OpenCV", cv_img)
    cv2.waitKey(1)

Run the Python program, and we can see the Raspberry Pi do image classification on the video from the ESP32!
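The loop in this tutorial treats each chunk as one image, which depends on chunk boundaries lining up with frames. If decoding errors show up often, one possible alternative is to buffer the incoming bytes and cut out complete frames between the JPEG start marker (FF D8) and end marker (FF D9). The sketch below assumes the stream carries plain JPEG frames without embedded thumbnails; `extract_frames` is a hypothetical helper, not part of the original program.

```python
JPEG_START = b"\xff\xd8"  # JPEG start-of-image marker
JPEG_END = b"\xff\xd9"    # JPEG end-of-image marker


def extract_frames(buffer: bytes):
    """Split complete JPEG frames out of buffer.

    Returns (frames, remainder), where remainder holds the bytes of a
    frame that has not fully arrived yet.
    """
    frames = []
    while True:
        start = buffer.find(JPEG_START)
        if start == -1:
            # No frame start found. Keep the last byte in case it is the
            # first half of a start marker split across two chunks.
            return frames, buffer[-1:]
        end = buffer.find(JPEG_END, start + 2)
        if end == -1:
            # The frame has started but is incomplete: keep it for later
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]


# Usage sketch (commented out because it needs the camera on the network):
# buffer = b""
# for chunk in res.iter_content(chunk_size=4096):
#     frames, buffer = extract_frames(buffer + chunk)
#     for jpg in frames:
#         cv_img = cv2.imdecode(np.frombuffer(jpg, np.uint8), 1)
```

With this approach the chunk size no longer has to be larger than a frame, since incomplete frames are carried over to the next iteration.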

You may find the sample code on GitHub.

Conclusion and Assignment

ESP32-based cameras can add a lot of flexibility to AI projects. For instance, we may stream videos from multiple ESP32 cameras to a more powerful computer and perform AI inference. Also, because the ESP32 is a lot smaller and cheaper and consumes less power, it is more suitable for projects like RC cars.

As an exercise, you may make the same modification to detect_picamera.py and use the video from the ESP32 for object detection.
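As a starting hint for the exercise, object detection results need to be drawn as boxes on the OpenCV image instead of on the Pi Camera preview. The sketch below assumes each detection carries a bounding box as normalized `(ymin, xmin, ymax, xmax)` values between 0 and 1; check this against your copy of detect_picamera.py before relying on it. The helper name `to_pixel_box` is hypothetical.

```python
def to_pixel_box(bounding_box, frame_width, frame_height):
    """Convert a normalized (ymin, xmin, ymax, xmax) box to pixel corners.

    Returns ((left, top), (right, bottom)), clamped to the frame, in the
    (x, y) order that cv2.rectangle takes for its two corner points.
    """
    ymin, xmin, ymax, xmax = bounding_box

    def clamp(value, upper):
        # Truncate to an integer pixel and keep it inside [0, upper]
        return max(0, min(int(value), upper))

    left = clamp(xmin * frame_width, frame_width - 1)
    top = clamp(ymin * frame_height, frame_height - 1)
    right = clamp(xmax * frame_width, frame_width - 1)
    bottom = clamp(ymax * frame_height, frame_height - 1)
    return (left, top), (right, bottom)


# Usage sketch (commented out because it needs OpenCV and a decoded frame):
# top_left, bottom_right = to_pixel_box(box, cv_img.shape[1], cv_img.shape[0])
# cv2.rectangle(cv_img, top_left, bottom_right, (255, 0, 255), 2)
```

The box should be scaled by the size of the frame that is displayed, not the resized copy passed to the model, so that it lines up with what appears on screen.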

Source: https://gpiocc.github.io/learn/raspberrypi/esp/ml/2020/11/08/martin-ku-stream-video-from-esp32-to-raspberry-pi.html
